Prompt Engineering: Unlocking the Power of Generative AI

Dr Mathew Parackal

Abstract

Generative Artificial Intelligence (Gen AI) has transformed human-machine interactions, making AI-generated content relevant and applicable across various industries. One critical factor influencing the effective use of Gen AI is Prompt Engineering, the structured design of inputs to produce optimal AI-generated responses.

This article explores Prompt Engineering, covering its fundamental components and methods for enhancing AI interactions. It elaborates on the key prompting techniques and their applications. The article concludes by emphasising the importance of refining Prompt Engineering strategies to ensure AI-generated content remains accurate, reliable, and ethically responsible.

This article was written using the principles of Prompt Engineering. The author structured the sections into prompts following the method outlined in the article. The manuscript was developed using Copilot. It then underwent a review for accuracy and was further refined using Claude.

Introduction

The rise of Generative Artificial Intelligence (Gen AI) has revolutionised how humans interact with machines. One of the critical aspects of maximising the effectiveness of Gen AI is prompt engineering, which involves crafting precise and structured inputs to elicit optimal responses from AI models. This emerging field is increasingly gaining attention from researchers and industries due to its ability to improve AI performance, enhance user experience, and ensure outputs align with intended objectives (Brown et al., 2020).

This article explores the principles of prompt engineering, highlighting its essential components, methodologies, and significance in AI interactions. It examines different prompting techniques, such as Retrieval Augmented Generation (RAG), Chain-of-Thought (COT), ReAct (Reason + Act), and Directional Stimulus Prompting (DSP), and their applications. Furthermore, it discusses how external knowledge integration through RAG can enhance prompt-driven AI responses (Lewis et al., 2020). Finally, the article concludes with insights into the future of prompt engineering and its growing impact on AI-driven solutions.

Understanding Prompt Engineering

Prompt engineering is the process of formulating inputs that guide a Gen AI model toward generating relevant, accurate, and meaningful responses. Unlike simple keyword-based queries, effective prompt design requires knowledge of AI behaviour, linguistic structuring, and an iterative refinement process to achieve high-quality interactions (Wei et al., 2022).

The process involves defining clear instructions, providing context, specifying inputs, and setting expectations for the output. These elements play a crucial role in ensuring AI models produce coherent, logical, and domain-specific responses.

Key Elements of Prompt Engineering

Instruction: The instruction tells the Gen AI model what task to perform, giving it a clear direction.

Prompt: Design a social media post that encourages people to participate in a beach clean-up event.

Context: The context provides background information or details surrounding the task. This element helps to shape the response by offering a scenario or setting.

Prompt: The beach clean-up event is part of a larger campaign to raise awareness about ocean pollution. The campaign is targeting young adults who are active on social media and interested in environmental conservation.

Inputs: Inputs are the specific data, requirements, or components that need to be included in the response. These can be keywords, themes, images, or any other material that should be considered or incorporated.

Prompt: Use the hashtags #CleanOceans #BeachHeroes in the post. Include a striking image of a polluted beach contrasted with a clean beach. The tone should be inspiring and action-oriented.

Expected Output: This element describes the desired result or the form that the response should take. It sets the criteria for what constitutes a successful completion of the instruction.

Prompt: The expected output is a visually appealing Instagram post that includes the specified hashtags, the contrasting images of the beaches, and a compelling call-to-action that motivates followers to join the beach clean-up event.
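The four elements are usually combined into a single prompt before it is sent to the model. The sketch below is one minimal way to do that, assuming a plain-text template with one labelled section per element; the `PromptSpec` class, the `build_prompt` function, and the section labels are hypothetical choices for illustration, not a standard format. The element texts are the beach clean-up prompts above.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the four key elements of a prompt."""
    instruction: str
    context: str
    inputs: str
    expected_output: str


def build_prompt(spec: PromptSpec) -> str:
    """Join the elements into one prompt, one labelled section per element."""
    sections = [
        ("Instruction", spec.instruction),
        ("Context", spec.context),
        ("Inputs", spec.inputs),
        ("Expected output", spec.expected_output),
    ]
    # Skip empty elements so the model is not shown blank headings.
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in sections if text.strip()
    )


beach_post = PromptSpec(
    instruction=("Design a social media post that encourages people to participate "
                 "in a beach clean-up event."),
    context=("The beach clean-up event is part of a larger campaign to raise awareness "
             "about ocean pollution. The campaign is targeting young adults who are active "
             "on social media and interested in environmental conservation."),
    inputs=("Use the hashtags #CleanOceans #BeachHeroes in the post. Include a striking "
            "image of a polluted beach contrasted with a clean beach. The tone should be "
            "inspiring and action-oriented."),
    expected_output=("A visually appealing Instagram post that includes the specified "
                     "hashtags, the contrasting images of the beaches, and a compelling "
                     "call-to-action that motivates followers to join the beach clean-up event."),
)

print(build_prompt(beach_post))
```

Keeping each element in its own labelled section makes it easy to change one element (for example, the context) while leaving the others untouched during iterative refinement.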

Types of Prompting Techniques

This article groups prompting techniques into four broad types based on their purpose and functionality.

Retrieval Augmented Generation (RAG)

RAG is a knowledge-enhancing prompting technique. It focuses on improving the factual accuracy of AI-generated responses by incorporating external knowledge sources. For example, a legal AI assistant using RAG can retrieve up-to-date case law before offering legal advice, making its response more reliable and credible. RAG achieves this by combining a generative AI model with external information retrieval. Unlike traditional AI models that rely solely on pre-trained knowledge, RAG first retrieves relevant documents from external databases (e.g., arXiv, Google Scholar, proprietary data) and then generates responses based on the retrieved content. This approach enhances factual reliability, making it particularly useful in academic, legal, and real-time information retrieval applications (Lewis et al., 2020).

Steps in the RAG Process

1. User Query Input: The user provides a query, e.g., "What are the latest advancements in quantum computing?"

2. Query Reformulation: The system refines the query so that the search returns more relevant results.

3. Document Retrieval: The model retrieves relevant information from external databases or user-provided documents.

4. Document Ranking & Filtering: Retrieved documents are ranked by relevance, and less relevant documents are filtered out.

5. Response Generation: The AI synthesises the retrieved information into a coherent response.

6. Final Output: The response is delivered to the user, incorporating citations where applicable.
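The six steps can be sketched end to end in a few dozen lines. This is a minimal illustration under loud assumptions: a tiny in-memory corpus stands in for external databases, a word-overlap score stands in for a real retriever (production systems typically use embeddings or a search engine), and the generation step is represented only by the grounded prompt that would be sent to a model. All names (`CORPUS`, `reformulate`, `retrieve`, `rank_and_filter`, `build_grounded_prompt`), the stopword list, and the corpus titles are hypothetical.

```python
import re

# Hypothetical in-memory corpus standing in for external databases (step 3).
CORPUS = {
    "doc1": "Survey of recent advancements in quantum computing hardware.",
    "doc2": "Error correction methods for quantum computing: a review.",
    "doc3": "A beginner's guide to baking sourdough bread.",
}

# Words dropped during reformulation; a toy list chosen for this example.
STOPWORDS = {"what", "are", "the", "latest", "in", "of", "a", "for", "to", "is"}


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def reformulate(query: str) -> set[str]:
    """Step 2: reduce the query to its content words (a crude reformulation)."""
    return {word for word in tokenize(query) if word not in STOPWORDS}


def retrieve(terms: set[str], corpus: dict[str, str]) -> list[tuple[str, float]]:
    """Step 3: score each document by the fraction of query terms it contains."""
    results = []
    for doc_id, text in corpus.items():
        overlap = len(terms & set(tokenize(text)))
        results.append((doc_id, overlap / len(terms) if terms else 0.0))
    return results


def rank_and_filter(scored: list[tuple[str, float]], min_score: float = 0.5,
                    top_k: int = 2) -> list[tuple[str, float]]:
    """Step 4: drop weak matches, then keep the top_k best-scoring documents."""
    kept = [item for item in scored if item[1] >= min_score]
    return sorted(kept, key=lambda item: item[1], reverse=True)[:top_k]


def build_grounded_prompt(query: str, ranked: list[tuple[str, float]],
                          corpus: dict[str, str]) -> str:
    """Prepare steps 5-6: give the model only the retrieved text and ask for citations."""
    sources = "\n".join(f"[{doc_id}] {corpus[doc_id]}" for doc_id, _ in ranked)
    return ("Answer the question using only the sources below. Cite each claim with its "
            "source id in brackets, and say so if the sources are insufficient.\n\n"
            f"Sources:\n{sources}\n\nQuestion: {query}")


query = "What are the latest advancements in quantum computing?"  # step 1
ranked = rank_and_filter(retrieve(reformulate(query), CORPUS))
print(build_grounded_prompt(query, ranked, CORPUS))
# A real system would now send this prompt to a generative model (step 5).
```

The unrelated document is filtered out before generation, so the model never sees it; this is the mechanism by which retrieval narrows the model's answer to relevant, citable sources.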

Chain-of-Thought (COT) Prompting

COT is a reasoning-based prompting technique that enhances logical reasoning and problem-solving by guiding the AI through a structured, step-by-step analytical process. A practical application of COT is in educational AI tutors, where a model breaks down a complex calculus problem into simpler steps to facilitate student understanding. This approach is effective in tasks requiring mathematical problem-solving, logical reasoning, and decision-making (Wei et al., 2022).

Steps in the COT Process

1. User Query Input: The user submits a question requiring logical reasoning, e.g., "A train travels 60 mph for 3 hours, then 40 mph for 2 hours. What is the total distance?"

2. Query Interpretation and Decomposition: The model breaks down the problem into smaller steps.

3. Step-by-Step Reasoning Execution: The AI computes intermediate results:

   - First phase: 60 mph × 3 h = 180 miles

   - Second phase: 40 mph × 2 h = 80 miles

   - Total distance: 180 + 80 = 260 miles

4. (Optional) RAG Integration: If external knowledge is required, the model retrieves relevant information before reasoning.

5. Final Answer Generation: The response is structured logically and presented clearly.
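In few-shot COT prompting, the prompt includes a worked example whose answer spells out the intermediate steps, so the model imitates that reasoning format (Wei et al., 2022). The sketch below, assuming a simple "Q:/A:" template, builds such a prompt for the train question and computes a reference answer with the same decomposition, which can be used to check the model's reply. The cyclist exemplar and the names `distance_steps` and `build_cot_prompt` are hypothetical.

```python
def distance_steps(legs: list[tuple[float, float]]) -> tuple[list[str], float]:
    """Decompose a multi-leg trip into one step per leg (speed x time) plus a total."""
    steps: list[str] = []
    leg_distances: list[float] = []
    for i, (speed, hours) in enumerate(legs, start=1):
        distance = speed * hours
        leg_distances.append(distance)
        steps.append(f"Leg {i}: {speed:g} x {hours:g} = {distance:g} miles")
    total = sum(leg_distances)
    parts = " + ".join(f"{d:g}" for d in leg_distances)
    steps.append(f"Total: {parts} = {total:g} miles")
    return steps, total


# Hypothetical worked example used as the few-shot demonstration.
EXEMPLAR_QUESTION = ("A cyclist rides 10 mph for 2 hours, then 15 mph for 1 hour. "
                     "What is the total distance?")
EXEMPLAR_LEGS = [(10, 2), (15, 1)]


def build_cot_prompt(question: str) -> str:
    """Few-shot COT prompt: a worked example with visible steps, then the new question."""
    steps, total = distance_steps(EXEMPLAR_LEGS)
    worked = ". ".join(steps)
    return (f"Q: {EXEMPLAR_QUESTION}\n"
            f"A: Let's work step by step. {worked}. The answer is {total:g} miles.\n\n"
            f"Q: {question}\n"
            "A: Let's work step by step.")


TRAIN = ("A train travels 60 mph for 3 hours, then 40 mph for 2 hours. "
         "What is the total distance?")
print(build_cot_prompt(TRAIN))

# Reference answer using the same decomposition, for checking the model's reply.
steps, total = distance_steps([(60, 3), (40, 2)])
print("\n".join(steps))
```

The prompt ends with the start of an answer ("Let's work step by step."), which invites the model to continue in the same step-by-step style as the exemplar rather than jumping straight to a number.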

ReAct (Reason + Act) Prompting

ReAct prompts are action-oriented. They interleave reasoning steps with actions, such as searches or other tool calls, enabling AI models to interact dynamically with data sources and refine their outputs based on what they retrieve. This technique is particularly useful in research applications, where an AI assistant can browse the web for the latest market trends before generating a summarised analysis (Yao et al., 2022).

Steps in the ReAct Process

1. Define the Problem: The user asks a question requiring research, e.g., "Find the best-rated electric cars of 2024."

2. Encourage Reasoning: The model outlines the key factors, such as battery life, affordability, and reviews.

3. Define Action Criteria: The AI determines where to retrieve the information (e.g., review sites, manufacturer websites).

4. Provide Hypothetical Scenarios: The model tests different retrieval sources to assess their reliability.

5. Evaluate the Process: The AI reviews the retrieved data and selects the most relevant insights.

6. Integrate RAG (if needed): The AI fetches real-time data for enhanced accuracy.

7. Generate the Final Response: The AI summarises the findings and presents the best options.
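The control flow behind ReAct alternates Thought, Action, and Observation entries in a growing transcript until the model decides to finish (Yao et al., 2022). The sketch below shows only that loop. `scripted_policy` is a hypothetical stand-in for the language model, `search_reviews` is a hypothetical tool, and the ratings for "Model A/B/C" are invented placeholders, not real review data.

```python
from typing import Callable

# Invented placeholder ratings for hypothetical models; NOT real review data.
TOY_REVIEWS = {"Model A": 4.6, "Model B": 4.1, "Model C": 4.8}


def search_reviews(query: str) -> str:
    """Hypothetical tool: pretend to search review sites and return 'name=rating' pairs.
    This toy version ignores the query and always returns the same placeholder data."""
    return ", ".join(f"{name}={score}" for name, score in TOY_REVIEWS.items())


TOOLS: dict[str, Callable[[str], str]] = {"search_reviews": search_reviews}


def top_rated(observation: str, n: int = 2) -> str:
    """Parse 'name=rating' pairs and return the n highest-rated names, best first."""
    pairs = [item.split("=") for item in observation.split(", ")]
    best = sorted(pairs, key=lambda pair: float(pair[1]), reverse=True)[:n]
    return ", ".join(name for name, _ in best)


def scripted_policy(transcript: list[str]) -> tuple[str, str, str]:
    """Stand-in for the language model: returns (thought, action, action_input).
    A real system would prompt a model with the transcript and parse its reply."""
    observations = [line for line in transcript if line.startswith("Observation: ")]
    if not observations:
        return ("I need current ratings before I can rank anything.",
                "search_reviews", "best-rated electric cars 2024")
    answer = top_rated(observations[-1].removeprefix("Observation: "))
    return ("The ratings are in; the highest-rated options answer the question.",
            "finish", answer)


def react(question: str, max_steps: int = 5) -> tuple[str, list[str]]:
    """Alternate Thought -> Action -> Observation until the policy chooses 'finish'."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        thought, action, action_input = scripted_policy(transcript)
        transcript.append(f"Thought: {thought}")
        transcript.append(f"Action: {action}[{action_input}]")
        if action == "finish":
            return action_input, transcript
        observation = TOOLS[action](action_input)  # act, then record what came back
        transcript.append(f"Observation: {observation}")
    return "No answer within the step limit.", transcript


answer, trace = react("Find the best-rated electric cars of 2024.")
print("\n".join(trace))
```

In a real system, `scripted_policy` would be replaced by a call that sends the transcript to a model and parses its "Action:" line. Capping the loop with `max_steps` guards against a model that never decides to finish.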

Directional Stimulus Prompting (DSP)

DSP prompts are designed to elicit a structured response from the AI model. They ensure the AI-generated content follows a predefined format to maintain coherence and organisation. A prime example of DSP is a financial reporting AI that generates structured company earnings summaries based on user-provided data, ensuring consistency and clarity in financial documentation (OpenAI, 2023).

Steps in the DSP Process

1. User Input & Problem Definition: The user provides a structured request, e.g., "Summarize recent developments in AI ethics."

2. Stimulus Direction Definition: The model is given key areas to focus on, e.g., fairness, accountability, transparency.

3. Contextual Expansion & Understanding: The AI interprets how the stimulus fits within the broader context.

4. Decision Point: Is external information needed?

   - Yes → RAG fetches relevant documents.

   - No → The AI proceeds with its pre-trained knowledge.

5. Response Structuring & Generation: The AI formulates a structured response based on the stimulus.

6. Review & Refinement: The response is checked for alignment with the structured prompt.

7. Final Output Delivery: The structured response is provided to the user, with citations where applicable.
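Following the steps above, the stimulus (focus areas) and the required structure can both be written into the prompt, and part of the review step can be automated by checking that every required section appears in the response. This is a minimal sketch assuming Markdown-style "## " headings and focus keywords supplied by hand; the function names `build_dsp_prompt` and `missing_sections` and the section list are hypothetical.

```python
def build_dsp_prompt(request: str, stimulus: list[str], sections: list[str]) -> str:
    """Combine the user request, the directional stimulus (focus keywords)
    and the required output structure into one prompt."""
    focus = ", ".join(stimulus)
    layout = "\n".join(f"## {name}" for name in sections)
    return (
        f"{request}\n\n"
        f"Focus on: {focus}.\n\n"
        "Structure the answer under exactly these headings, in this order:\n"
        f"{layout}"
    )


def missing_sections(response: str, sections: list[str]) -> list[str]:
    """Review step: return required headings that do not appear in the response.
    (Checks presence only, not order or content quality.)"""
    found = {
        line.strip()[3:].strip()
        for line in response.splitlines()
        if line.strip().startswith("## ")
    }
    return [name for name in sections if name not in found]


SECTIONS = ["Fairness", "Accountability", "Transparency"]
prompt = build_dsp_prompt(
    "Summarize recent developments in AI ethics.",
    ["fairness", "accountability", "transparency"],
    SECTIONS,
)
print(prompt)

# A draft that skipped one section would be sent back for refinement.
draft = "## Fairness\n...\n## Transparency\n..."
print(missing_sections(draft, SECTIONS))  # ['Accountability']
```

The automated check only covers structure; judging whether each section actually addresses its focus area still requires human or model review.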

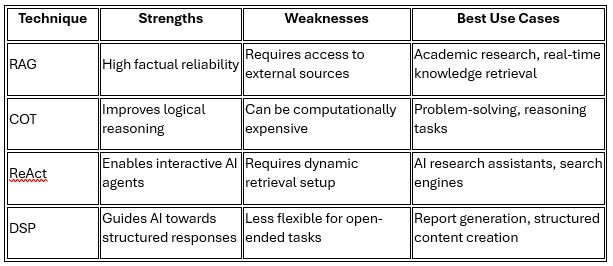

The four types of prompts can be used individually or in combination to achieve the desired outcome. Understanding their strengths and weaknesses (see Table 1) helps in deciding when and how to use each one.

Table 1: Strengths and Weaknesses of the Prompting Techniques

Ethical Considerations in Prompt Engineering

As AI models become more sophisticated, ethical concerns surrounding prompt engineering have gained prominence. One key issue is bias in AI-generated outputs. Since AI models learn from vast datasets, they can inadvertently perpetuate biases present in their training data, leading to unfair or discriminatory responses (Bender et al., 2021). To mitigate this, researchers advocate for continuous auditing of AI-generated content and diverse, representative training datasets.

Another significant ethical consideration is misinformation. Poorly crafted prompts or over-reliance on AI responses without verification can result in the spread of false or misleading information (Floridi & Chiriatti, 2020). This is particularly concerning in areas like healthcare and law, where misinformation can have severe consequences. Implementing fact-checking mechanisms and integrating external knowledge sources through techniques such as RAG can help reduce this risk.

Furthermore, privacy concerns arise when AI models interact with sensitive user data. Poorly structured prompts could lead an AI model to reveal confidential information inadvertently, or allow it to be manipulated into exposing such data (Brundage et al., 2020). To address this, strict regulatory frameworks and AI safety protocols must be incorporated to ensure compliance with data protection laws, such as the GDPR.

Finally, there is the issue of human dependency on AI-generated content. While prompt engineering enhances efficiency, excessive reliance on AI can diminish human critical thinking and problem-solving skills (Dignum, 2019). To counter this, AI should be used as a supplementary tool rather than a replacement for human expertise.

Future Directions

The future of prompt engineering lies in refining AI models to better understand context, intent, and nuance. One promising direction is multi-modal prompting, where AI can process and generate responses across multiple data formats such as text, images, and audio (Bommasani et al., 2021). Another area of development is self-improving prompt frameworks, where AI systems learn from user feedback to refine and optimise prompts dynamically.

Additionally, integrating prompt engineering with explainable AI (XAI) techniques will help enhance transparency in AI decision-making processes, making outputs more interpretable and trustworthy (Miller, 2019). Research into ethical AI frameworks will also continue to be essential in ensuring AI-generated content aligns with societal and regulatory expectations.

Conclusion

This article has explored the fundamental principles of prompt engineering and its significance in optimising AI interactions. By structuring prompts effectively, users can enhance the reliability, accuracy, and relevance of AI-generated responses. The discussion covered key elements of prompt engineering, various prompting techniques, and their applications in different industries. Additionally, it addressed ethical concerns related to AI bias, misinformation, privacy, and human dependency. Future advancements in AI, such as multi-modal prompting, self-improving AI models, and explainable AI frameworks, will continue to shape the field of prompt engineering. As AI systems become more sophisticated, research and regulatory efforts will be crucial in ensuring ethical and transparent AI interactions.

References

Bender, E. M., Gebru, T., McMillan-Major, A., & Shmitchell, S. (2021). On the dangers of stochastic parrots: Can language models be too big? Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, 610–623.

Bommasani, R., Hudson, D. A., Adeli, E., et al. (2021). On the opportunities and risks of foundation models. arXiv preprint arXiv:2108.07258.

Brown, T., Mann, B., Ryder, N., et al. (2020). Language models are few-shot learners. Advances in Neural Information Processing Systems, 33, 1877–1901.

Brundage, M., Avin, S., Wang, J., et al. (2020). Toward trustworthy AI development: Mechanisms for supporting verifiable claims. arXiv preprint arXiv:2004.07213.

Dignum, V. (2019). Responsible Artificial Intelligence: Developing Ethical AI Systems. Springer.

Floridi, L., & Chiriatti, M. (2020). GPT-3: Its nature, scope, limits, and consequences. Minds and Machines, 30(4), 681–694.

Lewis, P., Perez, E., Piktus, A., et al. (2020). Retrieval-augmented generation for knowledge-intensive NLP tasks. Advances in Neural Information Processing Systems, 33, 9459–9474.

Miller, T. (2019). Explanation in artificial intelligence: Insights from the social sciences. Artificial Intelligence, 267, 1–38.

OpenAI. (2023). Improving AI response quality with Directional Stimulus Prompting (DSP). Technical Report.

Wei, J., Wang, X., Schuurmans, D., et al. (2022). Chain-of-thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903.

Yao, S., Zhao, J., Yu, D., et al. (2022). ReAct: Synergizing reasoning and acting in language models. arXiv preprint arXiv:2210.03629.

- © Thought Leader